Kunihiro Tada, Mindware Research Institute

We have already demonstrated in the Innovation Map project that embedded vectors can be generated from product description texts for IT and data science products, which can then be used in data mining methods to create very sophisticated positioning maps. Taking this idea further, areas where qualitative analysis has been done in the past can be turned into elaborate data analysis. Further, in the area of sensory evaluation analysis of food, beverages and flavourings, this will lead to a solution to the fundamental problem that until now data analysis has been carried out by forcibly quantifying aroma, taste and texture, which cannot be measured directly.

All thanks to Embedding Vectors

This idea is so innovative, and many people are still unaware of it, that it is unlikely that anyone will read this article and move to obtain exclusive rights, but the essence of the idea is that ‘meaning’ is computable when using embedding vectors, so it is now considered to be public knowledge. It is considered to be. Therefore, we are happy to share with you what is possible when combining LLM with data mining techniques.

Research activities include qualitative and quantitative research. It is generally understood that qualitative research provides a rough understanding of the overall problem structure, which can then be refined through quantitative research, ultimately leading to more concrete decision-making. While qualitative research can flexibly gather a wide range of ill-defined information through literature and interviews, there is an impression that ambiguity is always attached to it. Quantitative research (e.g. questionnaires), on the other hand, is less flexible and may miss important information, as it quantifies results but accepts nothing but standardised information. Think tanks, research companies, management consultants and other industries have made efforts to compensate for the shortcomings of both by conducting both qualitative and quantitative research.

LLM and text-embedded vectors are likely to upend this perception. What is happening in the world today is a breakthrough in the ability to quantitatively analyse ‘unstructured qualitative information’, which previously could not be analysed quantitatively. This allows for more precise analysis of dense and useful concepts previously lying dormant in qualitative information.

Semantic data mining case study: sensory vector analysis

Sensory evaluation analysis in the food, beverage and flavour industry attempts to quantify and analyse internal experiences such as aroma, taste and texture. At the moment, it is not possible to measure these directly, so specially trained experts called sensory evaluators quantify items such as sweetness, pungency, bitterness, saltiness… of the product and analyse the data together with the consumer’s preference for the product. However, this elemental reduction approach has its limitations, as aromas, tastes and textures are very complex.

To be precise, it is not that elemental reduction is bad, but that traditional analysis methods cannot capture complex phenomena because the number of decomposed elements is too small. So using embedding vectors, the meaning of a text can be decomposed into a thousand or several thousand dimensions, which is ‘semantic decomposition analysis’, or hyper-multivariate analysis. This allows far more complex analysis than the multivariate analysis used in traditional text mining.

We have abandoned the conventional quantification of ‘sweetness is 1.2, pungency is 0.5…’ and have decided to adopt rich expressions such as those presented by TV personalities in food reports. The ‘sensory vector analysis’ introduced here is an attempt to utilise the subtleties of information that have been truncated up to now without omission. As an example, 20 fictitious crisps were prepared. The tasting experience of each crisp is expressed in rich natural language as follows:

- Classic Sea Salt

The golden brown chips sparkle as they catch the light, with each piece evenly coated in fine sea salt crystals. Their appearance is simple yet inviting, a classic look that promises comfort and satisfaction. As you bring the bag closer, the subtle aroma of freshly fried potatoes greets your nose, bringing with it a wave of nostalgia. The scent is warm and earthy, evoking memories of seaside fries and home-cooked meals.

The first bite is a revelation in texture. The crunch is loud and crisp, yet the chip retains a tender quality that melts into your palate. The flavor is uncomplicated but bold—a pure, unadulterated potato taste elevated by just the right amount of sea salt. As you chew, the saltiness intensifies, perfectly balancing the natural sweetness of the potato. The aftertaste is clean and refreshing, leaving you with a craving for just one more chip—an urge that’s hard to resist.

(Full text here)

Although it is possible to analyse Japanese texts, LLMs are more native to English, so in this case analysis is carried out using English texts. First, these texts are converted into embedded vectors using LLM. The experiment uses the OpenAI API to obtain vectors with 1536 dimensions.

Human cognitive abilities cannot interpret vectors with 1536 dimensions, so the dimensions have to be reorganised through multivariate analysis. This process can be referred to as semantic data mining. Methods that can be used include principal component analysis (PCA), t-SNE, UMAP and self-organising maps (SOM).

A detailed description of the individual methods is beyond the scope of this section; PCA is the most common method, but dimensionality reduction is not very efficient, as a significant number of factors (dimensions) must be used to obtain sufficient accuracy when dimensionality reduction is used for supermultiple dimensions, such as 1536 dimensions with PCA. However, if the number of cases (number of texts) is small, the final number of dimensions will be small no matter which method is used, so PCA may be useful for selecting a relatively affordable number of dimensions (for human interpretation).

t-SNE and UMAP are recently popular methods for efficient dimension reduction. If the number of cases is sufficiently large, UMAP is convenient to choose any number of dimensions. Moreover, UMAP is so efficient that it can reduce 1536 dimensions to three and still retain the proximity relationships between cases quite accurately. Finally, humans can only recognise images in two dimensions, but to understand richer meanings, it seems to be a good idea to represent them in a moderate number of dimensions (10 to a dozen).

SOM can also be seen as a dimensionality reduction method, but we propose it as a method for displaying the results of the above dimensionality reduction: in the case of SOM, the dimension itself is not reduced, but a two-dimensional free surface composed of discrete nodes is modelled in a multi-dimensional data space. so the resulting map retains the original dimensional information of the input data. If you want to see information in multiple dimensions at the same time, SOM is definitely more convenient than 3D visualisation. However, super-multi-dimensional abstract numbers, such as embedded vectors, are impossible for humans to interpret and must be used in conjunction with other dimensionality reduction methods.

It is also important to bear in mind that SOM is an algorithm that matches a node with each data point and the node closest to the data point is the ‘winner’. The implication of this is that it does not directly take into account the proximity relationship between data points. In many cases, the result is that similar data records will still belong to the same node. It is a little more difficult to explain, but in the case of hyper-multidimensional data, it can happen that data records belonging to the same node in the SOM are not so homogeneous.

Therefore, in the case of hyper-multidimensional data, it seems just right to reduce the number of dimensions to a reasonable number using another method (e.g. UMAP) that takes into account the proximity relationships between data points before visualising them in the SOM. This view may not be very pleasant for SOM experts, but from a neutral standpoint, this is a pragmatically wise decision. Of course, it is also possible to train a SOM directly from the embedding vectors, in which case we can associate the UMAP values to the SOM (with weight 0) for interpretation.

Another drawback of SOM that needs to be pointed out is that SOM models the input data and does not represent any space outside of it. This means that in the case of product planning, similar relationships between existing products can be well represented, but if you want to plan a product that is completely different from them, it is difficult to explore the space outside of the existing product range. There are several possible remedies for this.

Interpretation of factors by LLM

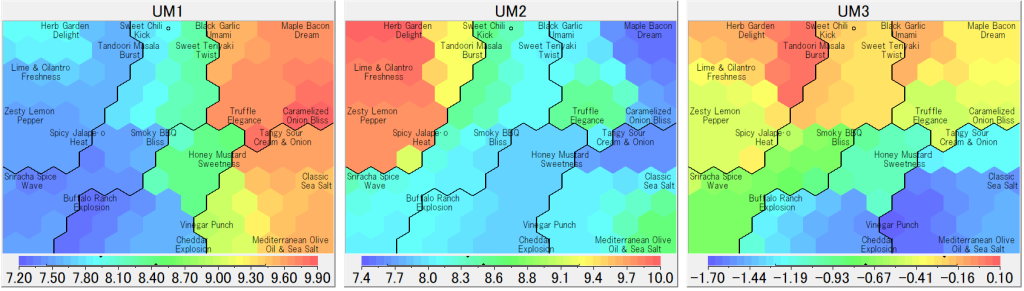

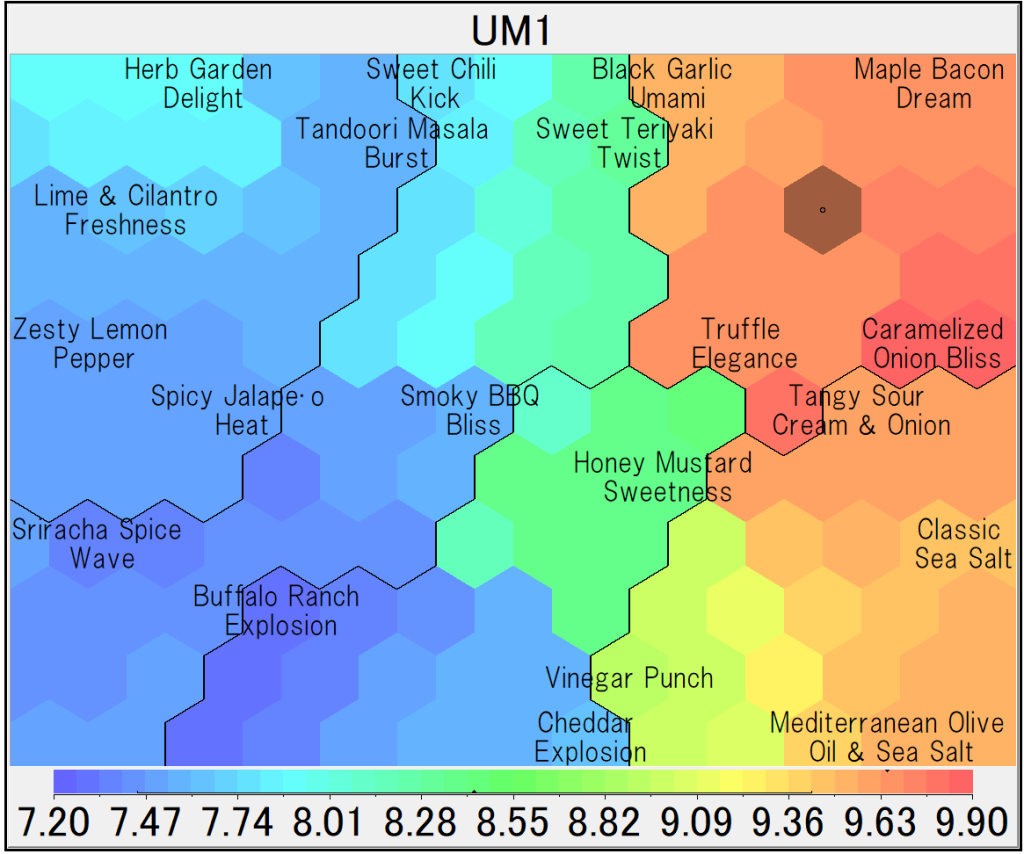

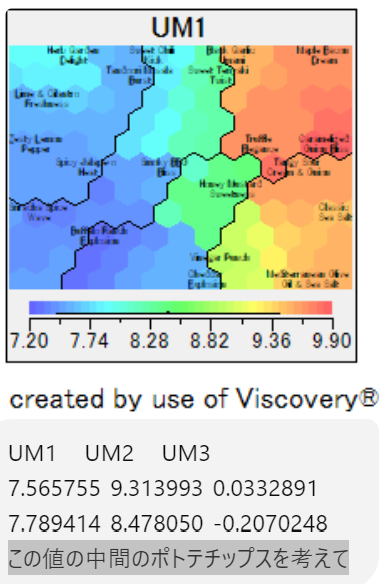

Be that as it may, the following is the result of converting the above text information of crisps into a vector, reducing it to three dimensions with UMAP and displaying it with SOM.

UM1, UM2 and UM3 are the new dimensions obtained by UMAP. This does not make sense, so we presented the whole .som file (som node values, labels and original text) to ChatGPT and asked them to ‘interpret UM1, UM2 and UM3 and convert them into terms that humans can understand’, which resulted in the following answer (If LLM does not accept .som files, export the map’s nodes to a text file):

To translate the abstract numerical values of UM1, UM2, and UM3 into more understandable concepts, we can interpret them as follows. Numerical maps generated by tools like Viscovery SOMine decompose and display the distribution and characteristics of elements along each axis.

UM1: Satisfaction / Richness

UM1 can be interpreted as representing overall consumer satisfaction or the richness of flavor.

- The higher the value, the richer and more complex the flavor profile, often with a sense of luxury or impact that consumers appreciate.

- Examples include flavors such as truffle or maple bacon, which tend to score high on this axis due to their richness and indulgent characteristics.

UM2: Balance / Depth of Layers

UM2 likely reflects the balance or depth of layers in the flavor profile.

- Higher values indicate a harmonious combination of multiple taste elements (sweetness, saltiness, acidity, umami) without monotony.

- Flavors such as herb-based or spice-enhanced varieties tend to score high on UM2, showcasing complexity and a well-rounded balance.

UM3: Freshness / Lightness

UM3 can be viewed as representing freshness or lightness in flavor.

- Positive values emphasize refreshing, citrusy, or herbaceous notes, along with light saltiness or acidity.

- Negative values indicate bolder, heavier elements, such as spiciness, smokiness, or richer sweetness and umami.

- Examples of flavors scoring low on UM3 include Smoky BBQ or garlic-based varieties, known for their intense and heavy profiles.

Summary

- UM1: Satisfaction / Flavor Richness

- Higher values indicate stronger flavor impact and greater satisfaction.

- UM2: Balance / Depth of Layers

- Higher values suggest greater flavor diversity and balance.

- UM3: Freshness / Lightness

- Positive: Fresh and light flavors (citrus or herb-based).

- Negative: Bold and intense flavors (sweet, smoky, or spicy).

By applying this interpretation to flavor evaluations and clustering results, it becomes easier to design products or identify improvement opportunities that align with consumer preferences and expectations.deliver a strong sense of satisfaction and depth in flavor. Overall, this represents a data point likely belonging to a popular cluster of flavors that balance multiple elements harmoniously.

end of quote

In this case, UMAP was used with 20 text cases, so only up to three dimensions were created. If the number of cases were several hundred, more dimensions could be created and a more detailed analysis could be carried out. Alternatively, using PCA, the number of dimensions can be increased (formally) to 20. You can imagine that it would be possible to carry out the kind of analysis that has traditionally been done with sensory evaluations.

It is surprising that LLMs are able to accurately interpret the resulting dimensional axes of so much dimensionality reduction in relation to the data. At this point, human cognitive abilities are far outstripped. It can be predicted that multivariate analyses such as conventional PCA can also be analysed more powerfully with the help of LLMs than with human interpretation.

Reasoning about new product concepts

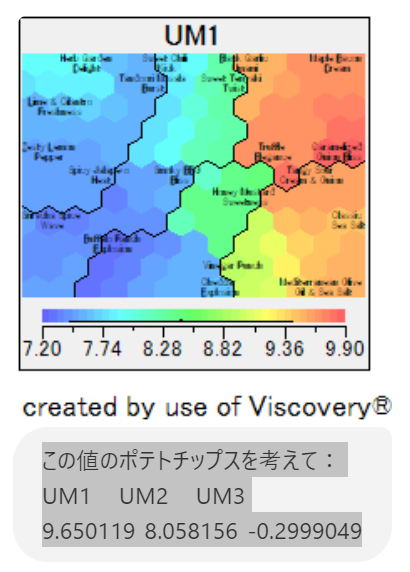

In addition, in the future, we will specify unlabelled places in the semantic space, i.e. spaces where no existing products exist, and analyse what fictitious products they correspond to. We get the node values (UM1, UM2 and UM3 values) from the dark shaded nodes in the map below and again ask the LMM: ‘Think of a crisp with this value: UM1 UM2 UM3 9.650119 8.058156 -0.2999049’:

The LLM responded as follows:

Proposed Flavor: Truffle Maple Fusion

Appearance

This chip features a luxurious golden-brown color with a subtle sheen. Fine black truffle powder is evenly dusted across the surface, complemented by a sparkle of caramelized maple sugar crystals, exuding a refined and indulgent feel.

Aroma

As soon as the bag opens, the earthy richness of black truffle fills the air, paired with the soft sweetness of maple. The aroma is reminiscent of an upscale French restaurant’s appetizer, offering a sophisticated and luxurious scent.

Texture

The first bite offers a satisfying crunch—sturdy yet delicately thin. The chips are evenly fried, delivering a balance of light crispiness and hearty texture.

Flavor

The initial taste is the gentle sweetness of maple sugar, which gradually gives way to the deep umami of black truffle and a hint of garlic. The complex layers of flavor unfold beautifully, balancing sweet and savory elements in perfect harmony. The combination creates a rich, multidimensional taste that lingers on the palate.

Aftertaste

The finish is defined by the truffle’s rich and earthy depth, while the sweetness subtly fades away, leaving a refined and clean impression. The lingering umami compels you to reach for another chip, creating an addictive snacking experience.

Flavor Concept

The “Truffle Maple Fusion” flavor is inspired by the high UM1 value (9.65), showcasing rich depth and elegant sweetness as its defining traits. With UM2 at 8.05, the flavor achieves a balance of complexity that remains engaging over multiple bites. The slightly negative UM3 (-0.30) suggests minimal tanginess or spiciness, allowing the richness and sweetness to take center stage.

This chip is perfect for consumers seeking to add a touch of luxury to their everyday snacks or those who crave a sophisticated and indulgent flavor experience.

end of quote

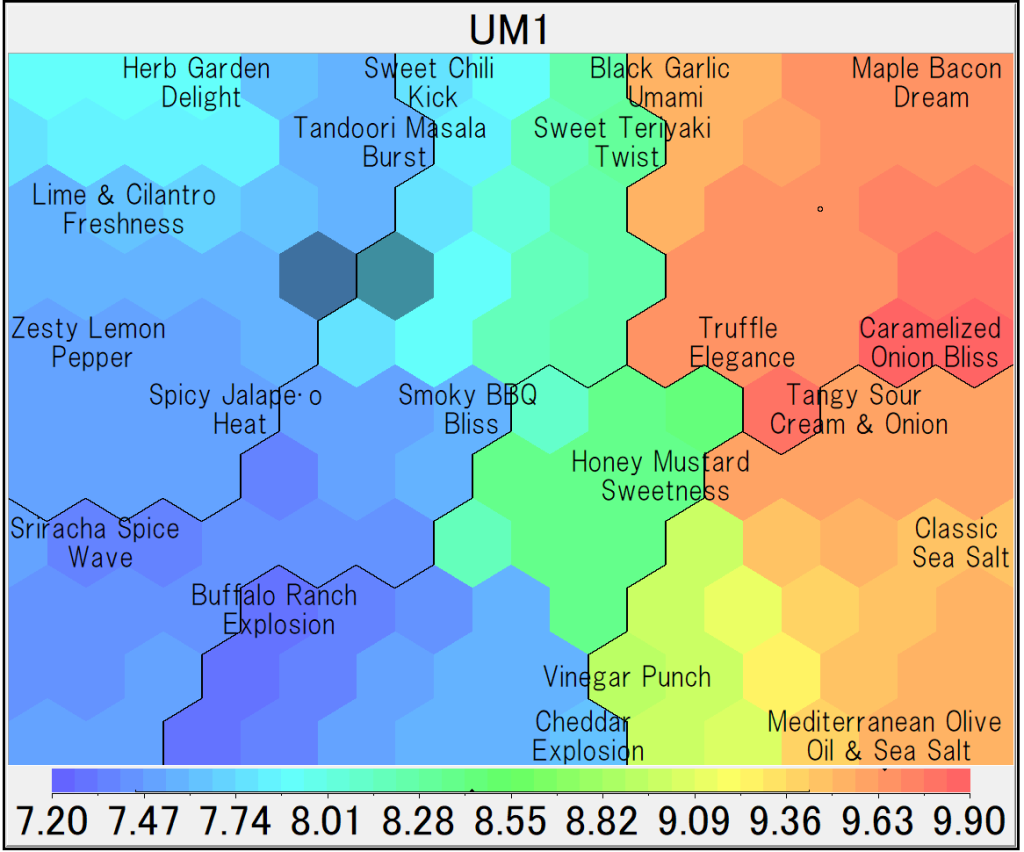

As far as the results are concerned, it can be seen that the concept is very elaborately derived from the node values. However, the results are also a simple intermediate result of the existing product, so let’s try one more time with another nodes. This time, instead of a single node, we selected two nodes across the cluster and asked the question ‘Think of a crisp of these intermediate values’:

Here is the results:

Values:

- UM1: 7.565755, UM2: 9.313993, UM3: 0.0332891

- UM1: 7.789414, UM2: 8.478050, UM3: -0.2070248

Midpoint Calculation:

- UM1 (7.565755 + 7.789414) / 2 = 7.6775845

- UM2 (9.313993 + 8.478050) / 2 = 8.8960215

- UM3 (0.0332891 – 0.2070248) / 2 = -0.08686785

Resulting Midpoint:

UM1: 7.6775845, UM2: 8.8960215, UM3: -0.08686785

Flavor Concept: Citrus Herb Harmony

Appearance

The chips have a golden-yellow base with subtle specks of green and white seasoning, symbolizing fresh herbs and a light citrusy touch. The visual appeal is clean, natural, and refreshing.

Aroma

Opening the bag reveals a vibrant blend of zesty lemon and lime with a fresh herbal undertone of parsley and basil. There’s a hint of saltiness in the air, creating an inviting balance between brightness and savoriness.

Texture

Each chip offers a light and airy crunch, crisp enough to satisfy but delicate enough to feel refined. The texture complements the flavor’s refreshing and uplifting profile.

Flavor

The initial taste is a bright, tangy zest of lemon and lime, which gives the palate a fresh and invigorating start. This is quickly followed by a soothing herbal medley, including parsley, chives, and a hint of basil. A light sprinkle of sea salt ties the flavors together, enhancing the natural potato base without overpowering the herbs and citrus.

Aftertaste

The aftertaste is clean and refreshing, with a lingering hint of lime zest and a subtle herbal warmth. The balance of tangy and savory elements makes it both invigorating and satisfying.

Flavor Concept Insights

- UM1 (~7.68): This value indicates a moderate but slightly lower richness or complexity, suggesting the chip should focus on being fresh and balanced rather than indulgent or heavy.

- UM2 (~8.90): The high UM2 reflects a flavor that is well-layered and appeals broadly, meaning the chip should have complexity while still feeling approachable.

- UM3 (~-0.09): The slight negative UM3 suggests that the chip should avoid excessive tanginess or spiciness but can still include a subtle tang from citrus.

Target Consumer

This flavor is perfect for those seeking a light and refreshing snack, ideal for pairing with sparkling drinks or as a palate cleanser. The balance of citrus and herbs offers an elegant, versatile flavor suitable for both casual and sophisticated settings.

end of quote

What do you think? I think this is a very sophisticated product plan, although I am sure it will probably be criticised by some professional sensory evaluators. In this case, we have taken a guess and selected the nodes, but in reality, you should define the new ‘space’ to be developed based on the consumer preference data for each product. In the next article, we will show you how to connect the consumer preference data with this analysis.

Mindware Research Institute offers consulting services to support the implementation of semantic data mining and sensory vector analysis as described above. We look forward to hearing from motivated companies.